120v Vs. 240v Ballast.

- Thread starter MOBee


lemonjellow

Well-Known Member

240v lights should cut down actual energy used, which means savings on your light bill.

lemonjellow

Well-Known Member

In some cases, as much as 50%.


Keenly

Guest

no no no no stop

I'm pretty sure if you get a 240 volt ballast you're not going to be able to plug it into a 120v outlet.

If you live in the US, get 120.

If you live overseas, 240.


Keenly said: no no no no stop. I'm pretty sure if you get a 240 volt ballast you're not going to be able to plug it into a 120v outlet. If you live in the US, get 120. If you live overseas, 240.

Dead on. The majority of outlets in US houses are only 120v; unless you know yours is 240v, it is most likely 120v.

GrizzlyAdams

Well-Known Member

All US outlets are 120v unless it's for a stove, clothes dryer, etc. Europe is fine for 240v. 240v setups are a little more efficient than 120v.

lemonjellow said: 240v lights should cut down actual energy used, which means savings on your light bill.

Do you have any links to back this claim up? Everything I have read has suggested the opposite. I saw a document that suggested that 240v may save a tiny amount on huge grow ops, but even that was heavily debated.

lemonjellow said: In some cases, as much as 50%.

Your 50% claim seems highly dubious to me.

BlueBalls

Well-Known Member

lemonjellow said: 240v lights should cut down actual energy used, which means savings on your light bill.

Not even by a long shot.

lemonjellow said: In some cases, as much as 50%.

Doubling your voltage will halve your amps, but the chargeable amount, watts (billed over time as kWh), stays the same.

Power companies are not that dumb.

Your bill will not change.

MOBee

Active Member

BlueBalls said: Your bill will not change.

So bills don't change? OK. Heard 240 was making ppl save some money!

lemonjellow

Well-Known Member

GrizzlyAdams said: Your 50% claim seems highly dubious to me.

Man, read it again. I did not claim anything. It says it SHOULD and in SOME CASES. I was only stating what I had read; I never said anything was definite. I think I would rather you had corrected me in a decent manner; there was no need to get an attitude about it. Someone just said using 240v cuts down amps by half, and that's probably why I thought it would save money. I was under the impression that you paid for electricity by amps. People make mistakes, it happens, and if it were the other way around I would not treat you with that attitude.

Man, a simple correction would have sufficed.

fureelz

Active Member

If you are charged per kilowatt-hour (kWh), it doesn't matter which one you use; they will charge you the same.

1. Appliance wattage ÷ 1000 = kilowatts (kW)

2. Kilowatts × (time appliance is switched on in hours) = kilowatt hours (kWh)

3. Kilowatt-hours × cost per kWh is what you pay for that appliance to run.

Example: a 100W light bulb on for 24 hours, where electricity costs 6.26¢ per kWh ($0.0626):

(100 ÷ 1000) × 24 = 2.4 kWh used

2.4 kWh × 6.26¢ ≈ 15¢
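The three steps and the light bulb example above can be sketched in Python as one function. This is only an illustration: `energy_cost` is a hypothetical helper name, and 6.26¢/kWh is just the example rate from this post, not a real tariff.

```python
def energy_cost(watts: float, hours: float, price_per_kwh: float) -> float:
    """Cost of running an appliance (hypothetical helper, illustration only)."""
    kilowatts = watts / 1000        # step 1: wattage / 1000 = kW
    kwh = kilowatts * hours         # step 2: kW x hours switched on = kWh
    return kwh * price_per_kwh      # step 3: kWh x price per kWh = cost

# 100 W bulb on for 24 hours at $0.0626 per kWh
cost = energy_cost(100, 24, 0.0626)
print(f"${cost:.4f}")  # prints $0.1502, i.e. about 15 cents
```

Note that the supply voltage appears nowhere in the calculation, which is the whole point of the post.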

To say that a 240v appliance is cheaper to run is incorrect:

1. It is double the voltage of a standard US socket.

2. Voltage = current in amps × resistance in ohms.

3. Watts = volts × current in amps.

4. Watts ÷ volts = amps.
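Rules 3 and 4 can be checked with a short sketch, assuming an idealized 1000 W load and ignoring wiring losses (the helper names are hypothetical): doubling the voltage halves the amps, while the kWh the meter bills for come out the same.

```python
def amps_drawn(watts: float, volts: float) -> float:
    """Rule 4: amps = watts / volts."""
    return watts / volts

def kwh_used(watts: float, hours: float) -> float:
    """Energy billed by the meter; voltage does not appear in the formula."""
    return watts / 1000 * hours

LOAD_WATTS = 1000  # assumed load wattage, for illustration only
for volts in (120, 240):
    amps = amps_drawn(LOAD_WATTS, volts)
    print(f"{volts} V: {amps:.2f} A, {kwh_used(LOAD_WATTS, 12):.1f} kWh per 12-hour day")
```

This prints 8.33 A at 120 V and 4.17 A at 240 V, with 12.0 kWh per day in both cases.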


lemonjellow said: there was no need to get an attitude about it.

I had no attitude, and was simply asking for some proof to back up what you were claiming. I thought I was extremely polite, especially since you were giving out incorrect information. At no point did I attack you personally, just the information you were giving out.

If you had made it clearer that you were just guessing, I would have been even more polite.

To add something a bit more useful to this thread, the following is from a thread on ICMag, which explains why you may save a tiny amount of money running 240 volts, and why it is usually a waste of time to do so unless you are running a massive grow op.

MadPenguin said: Yes, 240v will save you money, but not a lot. IMO, not enough to justify going all 240v gung ho.

It has to do with watt loss, also called power loss, copper loss, or winding loss. There is also such a thing as iron loss, which is also known as core loss.

Watt/winding/power/copper loss comes into play with a resistive load (baseboard heaters, water heaters, clothes dryers).

Iron/core loss comes into play with an inductive load (ballasts, motors, anything with a ferrous core).

It would probably help to know the difference between a resistive load and an inductive load, but you guys can google it if you really want to know. If you're even remotely interested in electricity, it would benefit you to know the difference between the two.

Iron loss is due to two things: hysteresis and eddy currents.

Copper loss = Energy dissipated in the form of heat due to the resistance of the wire or element.

Hysteresis = Magnetic friction in the core.

Eddy Currents = Electric currents induced in the core.

This is why digital ballasts are INSANELY more efficient than magnetic "core & coil" ballasts. I find it funny when 240v zealots are running all mag ballasts....

Actually, any of these losses is energy being dissipated in the form of wasted heat, and thus not utilized by the load (because it never gets there, i.e. it bleeds off as heat).

That's why resistive loads like incandescent bulbs are remarkably inefficient at making light. You use an element with an insanely high amount of resistance (the tungsten filament in a light bulb), and when you apply current to it, the electrons have an extremely hard time moving through it, so the high-resistance material gets insanely hot (due to friction between electrons and tungsten). Then it starts to glow, thus providing light. Did you know that a light bulb filament runs at roughly half the temperature of the sun's surface (around 2,700 to 3,000 K versus about 5,800 K)?

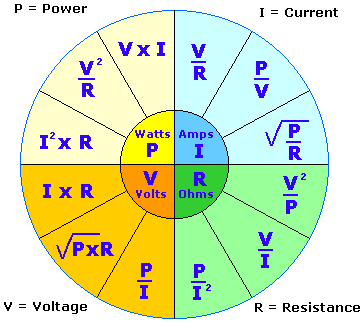

Math is an indisputable way to confirm copper loss, by way of Ohm's Law:

P = I² x R

We'll use one 1000W ballast as an example. Let's just say that this ballast draws 8.3A @ 120v and 4.16A @ 240v (Ohm's Law again).

We ran a dedicated #14 AWG circuit to feed this ballast. It's a multitap ballast, meaning it can run at 240v or 120v. What should I use? Doesn't really matter, but since we are talking about copper loss, let's figure it out. The distance from circuit breaker to receptacle is 78 feet. So that's 78' of 14/2 Romex.

I go to Chapter 9, Table 8 in the NEC and find that 1000' of #14 CU has a resistance of 3.07 Ω. So our 78' has a resistance of 0.24 Ω.
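Scaling the table value to the cable length is a simple proportion, sketched below with a hypothetical helper. One caveat worth knowing: current flows out on one conductor of the 14/2 cable and back on the other, so the loop the current actually travels is about 156 ft, roughly twice the 0.24 Ω figure used in the quote. The 3.07 Ω per 1000 ft value is the one quoted above for #14 copper.

```python
OHMS_PER_1000_FT = 3.07  # #14 copper, the NEC Chapter 9 Table 8 figure quoted above

def wire_resistance(length_ft: float, ohms_per_1000_ft: float = OHMS_PER_1000_FT) -> float:
    """Resistance of a run of wire, scaled linearly from the per-1000-ft value."""
    return ohms_per_1000_ft * length_ft / 1000

run_ft = 78
one_conductor = wire_resistance(run_ft)      # about 0.24 ohm, as in the quote
out_and_back = wire_resistance(2 * run_ft)   # about 0.48 ohm, hot plus return conductor
print(f"one conductor: {one_conductor:.2f} ohm, out and back: {out_and_back:.2f} ohm")
```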

120v circuit:

(8.3 × 8.3) × 0.24 = 16.53 watts lost

240v circuit:

(4.16 × 4.16) × 0.24 = 4.15 watts lost
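The two loss figures above are just P = I² × R applied to each current. The sketch below (hypothetical helper name) reproduces them, and also shows what happens if the out-and-back resistance of about 0.48 Ω is used instead of 0.24 Ω: both losses roughly double (about 33 W versus 8.3 W), so the gap grows to roughly 25 W, which is still small next to a 1000 W lamp.

```python
def copper_loss(amps: float, ohms: float) -> float:
    """Watts turned into heat in the wire: P = I^2 * R."""
    return amps ** 2 * ohms

CURRENT_120V = 8.3   # amps, figures used in the quote
CURRENT_240V = 4.16

for ohms in (0.24, 0.48):  # one conductor vs. hot plus return conductor
    loss_120 = copper_loss(CURRENT_120V, ohms)
    loss_240 = copper_loss(CURRENT_240V, ohms)
    print(f"R = {ohms} ohm: 120 V loses {loss_120:.2f} W, "
          f"240 V loses {loss_240:.2f} W, difference {loss_120 - loss_240:.2f} W")
```

With R = 0.24 Ω this gives 16.53 W, 4.15 W and a 12.38 W difference, matching the quote.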

Holy crap! That's a lot right? Wrong.

Take the difference of the two to see what we are "missing out on" by running at 120v, which is 12.38 watts.

Savings = 12.38 W × 12 h × $0.11 per kWh ÷ 1000 = $0.0163 per day

You save a penny and a half per day on a 12-hour bloom cycle, billed at 11 cents per kWh, by running at 240v...

I'm gonna switch right now! Not.
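Here is the savings line with the units written out: the 11 cents is the price per kWh, and dividing watt-hours by 1000 converts them to kWh, so the result is in dollars per day. A sketch with a hypothetical helper:

```python
def daily_savings(watts_saved: float, hours_per_day: float, dollars_per_kwh: float) -> float:
    """Dollars saved per day from a constant reduction in wasted watts."""
    kwh_saved = watts_saved * hours_per_day / 1000  # Wh -> kWh
    return kwh_saved * dollars_per_kwh

per_day = daily_savings(12.38, 12, 0.11)
print(f"${per_day:.4f} per day")  # prints $0.0163 per day
```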

Let's put it another way. If you really love Wrigley's Juicy Fruit gum and it costs $1.29 at your local supermarket, after running your light at 240v for

947 HOURS

you can get a "free" pack of gum. Sweet!!!! Juicy Fruit is gonna move ya. It's a song, sing it loud, the taste is gonna move ya!
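The 947-hour figure comes from dividing the price of the gum by the savings per hour of run time. A sketch, again with a hypothetical helper and the quote's numbers (12.38 W saved, 11 cents per kWh, $1.29 gum):

```python
def hours_to_recoup(price: float, watts_saved: float, dollars_per_kwh: float) -> float:
    """Hours of run time before the saved energy is worth `price` dollars."""
    dollars_per_hour = watts_saved / 1000 * dollars_per_kwh
    return price / dollars_per_hour

hours = hours_to_recoup(1.29, 12.38, 0.11)
print(f"{hours:.0f} hours, about {hours / 12:.0f} days of a 12-hour light cycle")
```

That works out to roughly 79 days of flowering under a 12-hour light schedule for one pack of gum.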

BTW, that's a bad Ohm's Law wheel. Didn't notice 'til just now. "V" should be "E", as in electromotive force.